Develop EvalAI, an automated platform for evaluating and optimizing multimodal large language models (LLMs) across text, image, and audio modalities. EvalAI streamlines LLM evaluation without requiring labeled data, reducing manual effort and cost while aiming for accurate and fair results.

Features

- Multimodal LLM Evaluation:

- Support for evaluating models across text, images, and audio.

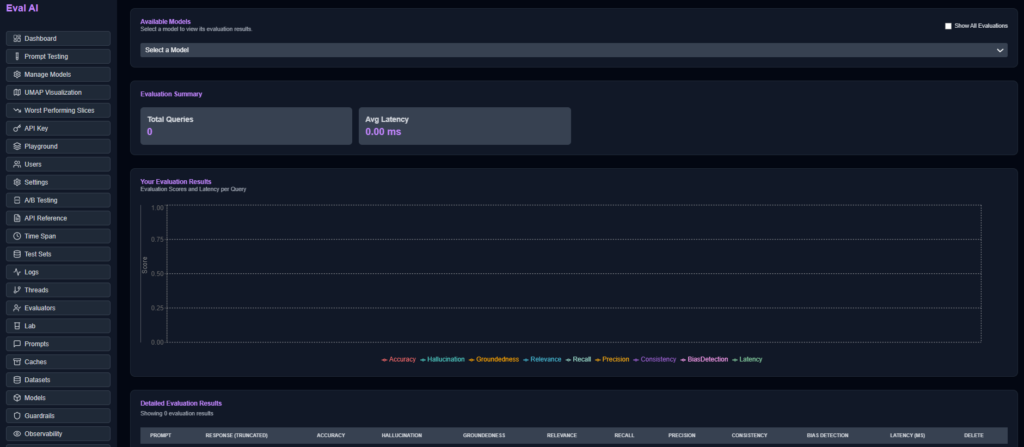

- Report key metrics: accuracy, hallucination rate, groundedness, relevance, recall, precision, consistency, and bias.

- Automated Evaluation Pipeline:

- Automatic detection of biases, hallucinations, and inconsistencies.

- Real-time feedback to support ongoing model optimization.

- No Ground Truth Dependency:

- Run scalable, flexible evaluations without labeled data by retrieving relevant context and using it as a stand-in for ground truth.

- User-Friendly Dashboard:

- Interactive React dashboard that visualizes model performance, tracks improvements over time, and surfaces real-time feedback.

- Model Optimization:

- Automated model refinement based on feedback, enabling quick iterations with minimal manual adjustments.
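
To make the "No Ground Truth Dependency" idea concrete, here is a minimal sketch of how a response could be scored against retrieved context instead of labeled answers. It is an illustration under stated assumptions, not EvalAI's actual method: the function names (`tokenize`, `sentence_support`, `groundedness_report`), the token-overlap heuristic, and the `0.6` threshold are all hypothetical. A production pipeline would more likely rely on embedding similarity or an entailment model.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def sentence_support(sentence: str, passages: List[str]) -> float:
    """Fraction of the sentence's tokens found in its best-matching passage."""
    tokens = tokenize(sentence)
    if not tokens:
        return 0.0
    return max(
        (len(tokens & tokenize(p)) / len(tokens) for p in passages),
        default=0.0,
    )


def groundedness_report(
    response: str, passages: List[str], threshold: float = 0.6
) -> Dict[str, object]:
    """Score each sentence against the retrieved passages.

    Sentences whose support falls below the (hypothetical) threshold are
    flagged as possible hallucinations. The overall groundedness is the
    mean support across sentences.
    """
    sentences = [
        s.strip() for s in re.split(r"(?<=[.!?])\s+", response) if s.strip()
    ]
    scores = [sentence_support(s, passages) for s in sentences]
    flagged = [s for s, score in zip(sentences, scores) if score < threshold]
    groundedness = sum(scores) / len(scores) if scores else 0.0
    return {"groundedness": groundedness, "flagged": flagged}


if __name__ == "__main__":
    context = ["The Eiffel Tower is in Paris and was completed in 1889."]
    answer = "The Eiffel Tower is in Paris. It was designed by aliens from Mars."
    print(groundedness_report(answer, context))
```

In this sketch the retrieved passages play the role of ground truth, so the score is only as reliable as the retrieval step that supplies them.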

Tech Stack

- Backend: Hugging Face, Qdrant (vector storage), LangChain, Amazon SageMaker.

- Frontend: React for an intuitive dashboard.
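
As a rough illustration of the role vector storage plays in the backend, the sketch below retrieves the stored passages most similar to a query. Everything in it is a stand-in chosen so the example runs with the Python standard library alone: `InMemoryVectorStore` is a hypothetical class and not the Qdrant client API, and `embed` is a toy bag-of-words vectorizer rather than a Hugging Face embedding model.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    norm = norm_a * norm_b
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database; keeps everything in a list."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        """Embed the text and store it alongside its vector."""
        self._items.append((text, embed(text)))

    def search(self, query: str, top_k: int = 2) -> List[Tuple[str, float]]:
        """Return the top_k stored texts ranked by cosine similarity to the query."""
        query_vec = embed(query)
        scored = [(text, cosine(query_vec, vec)) for text, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("Qdrant stores vectors for similarity search.")
    store.add("React renders the dashboard.")
    store.add("SageMaker hosts model endpoints.")
    for text, score in store.search("Which component stores vectors?", top_k=1):
        print(f"{score:.3f}  {text}")
```

The passages returned by a retrieval step like this are what a reference-free evaluator would compare model output against.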